Platforms like Unreal Engine aren’t out on a facial animation prairie laying tracks to hunt buffalo. Nobody is getting drunk in a saloon and shooting it out over who has the better de-aging technology.

This is not the Wild West of facial animation.

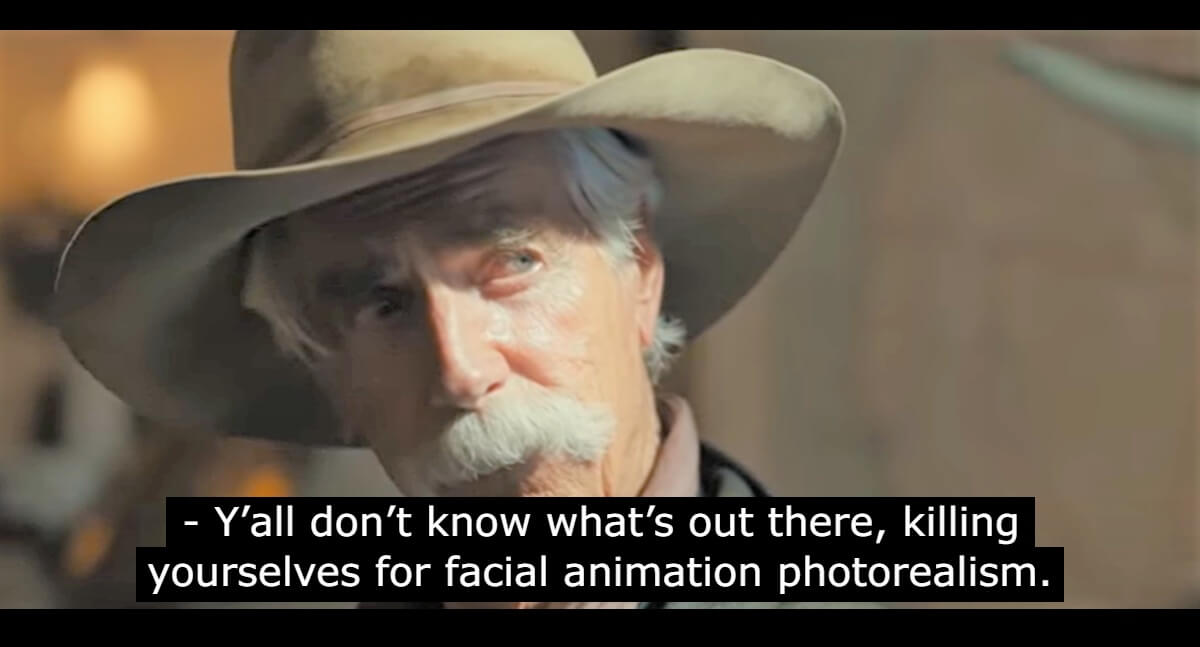

No. If 1980’s Pac-Man was the birth of facial animation, as gameranx asserted in its “The Evolution of Facial Animation in Video Games” video, then the Wild West would be more like…1993’s Doom. But transport one of those facial animation frontier folk into the 21st century of high-rises and flying cars, and they’ll look around and say:

“Nah, this still isn’t good enough.”

Put on your Muck boots and walk into any forum for animation commentary. GamingBolt, a YouTube channel with 1.2 million subscribers, published a July 2022 video about the games with the best facial animation. While touting the realism of today’s facial animation, the channel also made a point of saying:

“Video game graphics have certainly gotten impressive over the last couple of decades. For most games, however, facial animations still tend to be a sore point.”

Or look at “The Last of Us 2,” released in 2020 and heralded as having some of the best facial animation ever. Just two years later, in a Reddit thread titled “Ellie’s Facial Animations Are Amazing In The PS5 Remake,” one user captured a common feeling:

Jhogue60: Playing the remake has me playing Part 2 again, and the facial expressions in Part 2 do not hold their own when compared to Part 1 now. It’s wild

The gamer and film lover attitude of “it’s still not good enough” is mirrored by the companies racing to lead in facial animation. Like the railroad companies laying track, or the White Star Line building the Titanic, everybody is striving to get there first. Here are a few of the 2023 developments in the quest for dominance in facial animation.

Epic announces MetaHuman Animator for high-fidelity performance capture

Photo credit: Unreal Engine

MetaHuman Creator first launched in the spring of 2021 and immediately let creators mold digital humans with realistic hair, complexions, teeth, and body types. Epic’s first major plug-in release for MetaHuman Creator was Mesh to MetaHuman, which let creators play God by turning their own sculpted, scanned, or modeled meshes into rigged MetaHumans.

Epic has announced that its next step in facial animation will be MetaHuman Animator, due in the summer of 2023. The tool promises a streamlined approach to performance capture, letting creators capture an actor’s performance with an iPhone and a simple tripod. The MetaHuman Animator teaser video appears to show the actor’s expressions translating seamlessly into animation:

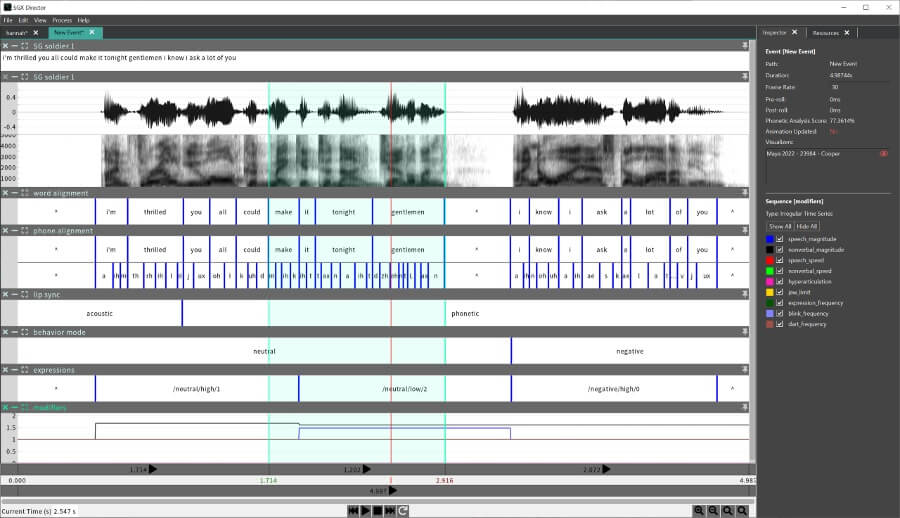

Speech Graphics co-founder and CTO Michael Berger tells 80 Level about the upcoming premiere of the upgraded SGX Director tool

The SGX Director console. Photo credit: Speech Graphics

80 Level recently published an in-depth profile of Speech Graphics in which co-founder and CTO Michael Berger answered questions about facial animation. Part of what has brought Speech Graphics even greater fame in 2023 (after working on games like “The Last of Us 2”) is its contribution to the widely praised lip-sync in “Hogwarts Legacy”.

Berger spoke in depth about the company’s technology and what it plans to show at the 2023 Game Developers Conference (GDC). He described Speech Graphics’ mission from the beginning:

Video game developers are some of the hardest customers to please because they are in a constant arms race over realism. Our mission was to fully automate high-quality animation, including lip sync, emotional and other non-verbal facial expressions, head motion, blinks, etc. We advanced our analyses to extract more and more information from the audio signal, and advanced our models to produce more naturalistic movements, to the point where we were really helping our customers.

He also described the upgraded SGX Director tool, which will premiere at GDC 2023:

SGX Director gives users direct control over animation performances by exposing key metadata that gets created during SGX processing. By editing the metadata on an interactive timeline users will see immediate changes in the animation. For example, you can move word boundaries, change behavior modes, swap facial expressions, or tweak modifiers such as intensity, speed, and hyperarticulation of muscles.

Berger also described upgraded lip-sync support for Japanese, Mandarin, and Korean, along with improvements that let the technology convey the emotion in non-verbal sounds such as grunts, laughs, coughs, and cries of pain.

All of these advancements point to a common thread: the evolution toward complete realism is happening at astonishing speed.

MARZ releases its Vanity AI aging and de-aging pipeline that is 300x faster than a traditional pipeline

That’s not a typo. We covered Vanity AI when it launched in January. The tool lets creators complete high-end 2D aging, de-aging, and wig, cosmetic, and prosthetic alterations in a matter of minutes. According to MARZ, a cosmetic shot that once took four to eight hours now takes one minute.

More than two dozen major studios have used the pipeline, on productions including Netflix’s Cabinet of Curiosities and Disney+’s WandaVision.

What about the backlash against “too much realism”?

There’s the long-studied phenomenon of the “uncanny valley,” where faces that look almost, but not quite, human provoke unease instead of empathy. The industry’s solution: create humans so well that no one can tell the difference.

However much audiences praise a piece of facial animation, the “style versus reality” debate never quite goes away. “The Last of Us” makes a good case study.

When “The Last of Us 2” came out in 2020, its facial animation was universally praised with phrases like “staggeringly realistic” and “like nothing anyone has ever seen in games”. Just over two years later, “The Last of Us Part I” remake was released for PS5, and that is where arguments against photorealism sprang up, some even before launch. One of the more scathing came from a June 2022 TheGamer article titled “The Last of Us Remake’s Approach To Realism Is Its Biggest Problem”. One paragraph sums up its argument well:

You can’t deny that it looks fantastic, but in shooting for a more realistic rendition of these characters – especially ones who weren’t present in the sequel like Tess – the upcoming remake removes much of the graphical flair that made it so special in the first place. Colours are significantly more muted, facial structure is changed to an extent that some characters are almost unrecognisable, and vocal performances are twisted into something they were never intended to be through new facial capture that will dilute the original emotional resonance.

One widely shared YouTube video by ElAnalistaDeBits compared the graphics of the original and the remake in side-by-side shots.

In short, the remake has thicker moss, more defined environmental elements, and more detailed faces, with richer color gradients, freckles, and creased skin. It’s up to the viewer to decide whether the original emotional resonance has been altered or is simply filtered through a much sharper lens.

Stylistic interpretation versus photorealism: the real question is whether the viewer connected

A side-by-side comparison of the original “The Last of Us” and “The Last of Us Part I”.

The artistic debate over which has greater merit, an animator’s stylistic interpretation or sheer photorealism, is ever-present. Whether it’s Guillermo del Toro’s take on Pinocchio or “The Last of Us” striving toward facial perfection, the question that matters most is: did the characters make you feel something?

Judging by the flurry of technological advancements, you could say these philosophical questions don’t matter. The quest is to explore and claim every last pocket of the rugged facial animation landscape, and it’s happening in real time, and fast, as companies push toward the end of the frontier. The only question that remains is:

What happens when they reach the coast?